Multilinguality and Language Technology

MT Team

We are a team in the MLT Lab at DFKI that specializes in neural machine translation (MT) and multilingual applications.

Machine translation is the automated process of converting input in one language into another language. We work on both text and sign language translation using deep learning techniques. Besides developing generic MT systems, we specialize in machine translation for low-resource languages, highly multilingual settings, and document and dialogue translation.

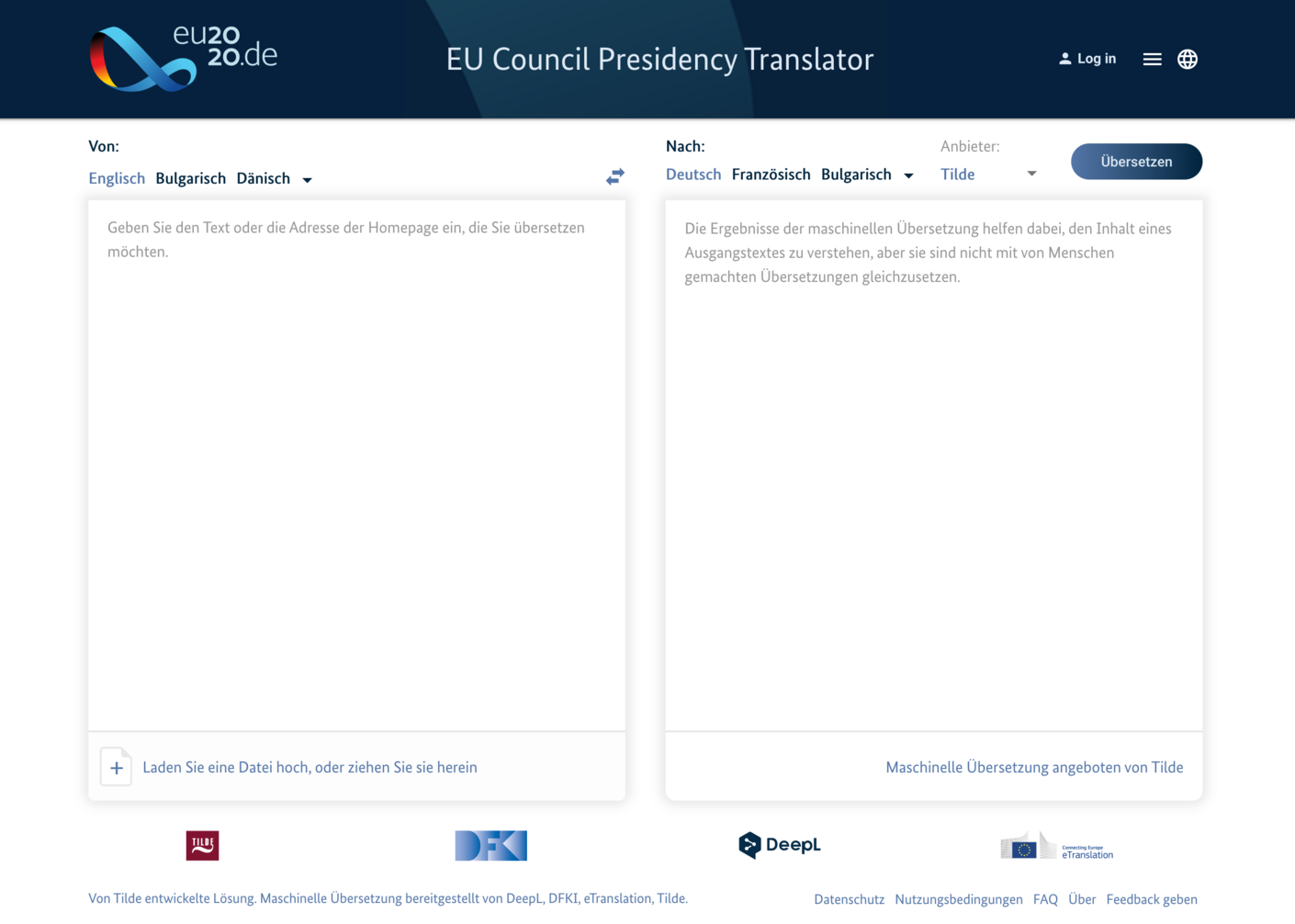

Demo: EU Council Presidency Translator with DFKI translation systems

Selected Current Projects

AVASAG

Avatar-based language assistant for automated sign translation

Period: 01.05.2020–30.04.2023, funded by BMBF.

CORA4NLP

Co(n)textual Reasoning and Adaptation for Natural Language Processing

Period: 01.10.2020–30.09.2023, funded by BMBF.

EUC-PT

EU Council Presidency Translator

Period: 01.02.2020–31.12.2020, funded by the German Federal Foreign Office (Auswärtiges Amt).

FAIR Forward

Advisory services for the Gesellschaft für Internationale Zusammenarbeit (GIZ) on technical aspects of AI in international cooperation, including natural language processing (NLP), training data, and data access, for FAIR Forward – Artificial Intelligence for All.

Period: 01.05.2020–30.06.2021, GIZ Project No. 19.2010.7-003.00

SocialWear

Socially Interactive Smart Fashion

Period: 01.05.2020–30.04.2024, funded by BMBF.

Contributions to D&R / TR Projects

We take part in projects of the D&R and TR groups, contributing machine translation and multilingual technologies:

ELRC

European Language Resource Coordination.

Upcoming shared task on multilingual cultural heritage translation at WMT2021.

NotAs

Multilingual Emergency Call Assistance: Supporting Emergency Call Handling with AI-based Language Processing

Neural machine translation for dialogues. Funded by BMBF.

Projects in Collaboration with Saarland University

IDEAL

Information Density and Linguistic Encoding

Period: 01.02.2018–31.01.2022, funded by DFG.

MMT

Multimodal Machine Translation – Convergence of multiple modes of input

Period: 01.03.2019–28.02.2021, funded by SPARC.

Selected Publications

- Farhad Akhbardeh, Arkady Arkhangorodsky, Magdalena Biesialska, Ondřej Bojar, Rajen Chatterjee, Vishrav Chaudhary, Marta R. Costa-jussà, Cristina España-Bonet, Angela Fan, Christian Federmann, Markus Freitag, Yvette Graham, Roman Grundkiewicz, Barry Haddow, Leonie Harter, Kenneth Heafield, Christopher Homan, Matthias Huck, Kwabena Amponsah-Kaakyire, Jungo Kasai, Daniel Khashabi, Kevin Knight, Tom Kocmi, Philipp Koehn, Nicholas Lourie, Christof Monz, Makoto Morishita, Masaaki Nagata, Ajay Nagesh, Toshiaki Nakazawa, Matteo Negri, Santanu Pal, Allahsera Auguste Tapo, Marco Turchi, Valentin Vydrin, and Marcos Zampieri. Findings of the 2021 Conference on Machine Translation (WMT21). In Proceedings of the Sixth Conference on Machine Translation (WMT), pages 1–93, Punta Cana (online), November 2021.

- Daria Pylypenko, Kwabena Amponsah-Kaakyire, Koel Dutta Chowdhury, Josef van Genabith, and Cristina España-Bonet. Comparing Feature-Engineering and Feature-Learning Approaches for Multilingual Translationese Classification. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP 2021), pages 8596–8611, Punta Cana, Dominican Republic, 2021.

- Jörg Steffen and Josef van Genabith. TransIns: Document Translation with Markup Reinsertion. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing: System Demonstrations (EMNLP 2021), pages 28–34, Punta Cana, Dominican Republic, 2021.

- Fabrizio Nunnari, Cristina España-Bonet, and Eleftherios Avramidis. A Data Augmentation Approach for Sign-Language-to-Text Translation In-the-Wild (Best Poster Award). In Proceedings of the 3rd Conference on Language, Data and Knowledge (LDK 2021), Open Access Series in Informatics (OASIcs), Vol. 93, pages 36:1–36:8, September 2021.

- Hongfei Xu, Qiuhui Liu, Josef van Genabith, Deyi Xiong, and Jingyi Zhang. Multi-Head Highly Parallelized LSTM Decoder for Neural Machine Translation. In Proceedings of the Joint Conference of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (ACL-IJCNLP 2021), pages 273–282, Bangkok, Thailand, 2021.

- Hongfei Xu, Qiuhui Liu, Josef van Genabith, and Deyi Xiong. Modeling Task-Aware MIMO Cardinality for Efficient Multilingual Neural Machine Translation. In Proceedings of the Joint Conference of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (ACL-IJCNLP 2021), pages 361–367, Bangkok, Thailand, 2021.

- Jingyi Zhang and Josef van Genabith. A Bidirectional Transformer Based Alignment Model for Unsupervised Word Alignment. In Proceedings of the Joint Conference of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (ACL-IJCNLP 2021), pages 283–292, Bangkok, Thailand, 2021.

- Dana Ruiter, Dietrich Klakow, Josef van Genabith, and Cristina España-Bonet. Integrating Unsupervised Data Generation into Self-Supervised Neural Machine Translation for Low-Resource Languages. In Proceedings of the 18th Biennial Conference of the International Association for Machine Translation (MT Summit XVIII), Vol. 1: MT Research Track, pages 76–91, virtual, August 16–20, 2021.

- Dana Ruiter, Cristina España-Bonet, and Josef van Genabith. Self-Induced Curriculum Learning in Self-Supervised Neural Machine Translation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP 2020), November 2020.

- Jingyi Zhang and Josef van Genabith. Translation Quality Estimation by Jointly Learning to Score and Rank. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP 2020), November 2020.

- Hongfei Xu, Josef van Genabith, Deyi Xiong, and Qiuhui Liu. Dynamically Adjusting Transformer Batch Size by Monitoring Gradient Direction Change. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (ACL 2020, July 5–10, virtual), pages 3519–3524, July 2020.

- Hongfei Xu, Josef van Genabith, Deyi Xiong, Qiuhui Liu, and Jingyi Zhang. Learning Source Phrase Representations for Neural Machine Translation. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (ACL 2020, July 5–10, virtual), pages 386–396, July 2020.

- Hongfei Xu, Qiuhui Liu, Josef van Genabith, Deyi Xiong, and Jingyi Zhang. Lipschitz Constrained Parameter Initialization for Deep Transformers. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (ACL 2020, July 5–10, virtual), pages 397–402, July 2020.

- Jesujoba O. Alabi, Kwabena Amponsah-Kaakyire, David I. Adelani, and Cristina España-Bonet. Massive vs. Curated Embeddings for Low-Resourced Languages: the Case of Yorùbá and Twi. In Proceedings of the 12th Language Resources and Evaluation Conference (LREC 2020, May 12–17, Marseille, France), European Language Resources Association (ELRA), Paris, 2020.

- Marta R. Costa-jussà, Cristina España-Bonet, Pascale Fung, and Noah A. Smith. Multilingual and Interlingual Semantic Representations for Natural Language Processing: A Brief Introduction. Computational Linguistics (CL), Special Issue: Multilingual and Interlingual Semantic Representations for Natural Language Processing, pages 1–8, MIT Press, Cambridge, MA, USA, 2020.

- Hongfei Xu, Deyi Xiong, Josef van Genabith, and Qiuhui Liu. Efficient Context-Aware Neural Machine Translation with Layer-Wise Weighting and Input-Aware Gating. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence and the 17th Pacific Rim International Conference on Artificial Intelligence (IJCAI-PRICAI 2020, January 5–10, virtual), pages 3933–3940, July 2020.

MT Members

Team Lead:

Dr. Cristina España i Bonet

cristinae@dfki.de

Team Members:

Dr. Cristina España i Bonet

Yasser Hamidullah

Research Assistants and Visiting Researchers:

Jesujoba Alabi

Kwabena Amponsah-Kaakyire

Niyati Bafna

Koel Dutta Chowdhury, M.Sc.

Damyana Gateva, M.A., B.Sc.

Nora Graichen

Daria Pylypenko, M.Sc.

Dana Ruiter, M.Sc.

Sonal Sannigrahi