EdTec-QBuilder: A Semantic Retrieval Tool for Assembling Vocational Training Exams in German Language

System Demonstration Track @ NAACL 2024

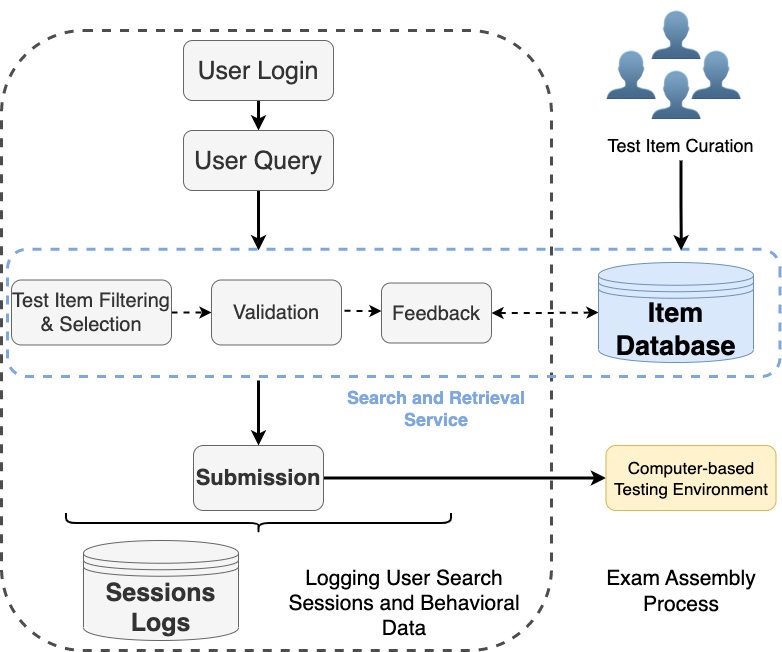

The Exam Assembly Task

In education, retrieving test items from a validated, curated item database and assembling them into comprehensive examination forms is challenging. While much research and development focuses on models for Automatic Item Generation (AIG), the crucial task of assembling examination forms remains underexplored. This gap presents an opportunity for research and development, especially on effective and efficient methods for assisted and automatic exam assembly.

While collaborating with the bfz group, Germany's leading vocational training provider, we identified the difficulties of curating an item database and filtering relevant test items for building (or assembling) formative examination forms. To address this challenge, we formulated the exam assembling process as an information retrieval task. We implemented and deployed a proprietary search service and tool to assist vocational educators in assembling examination forms. This system facilitates acquiring or crowdsourcing ground-truth expert data from manual exam assembly processes. As a system demonstration, we released EdTec-QBuilder, the public fork of our search service and tool.

System Implementation

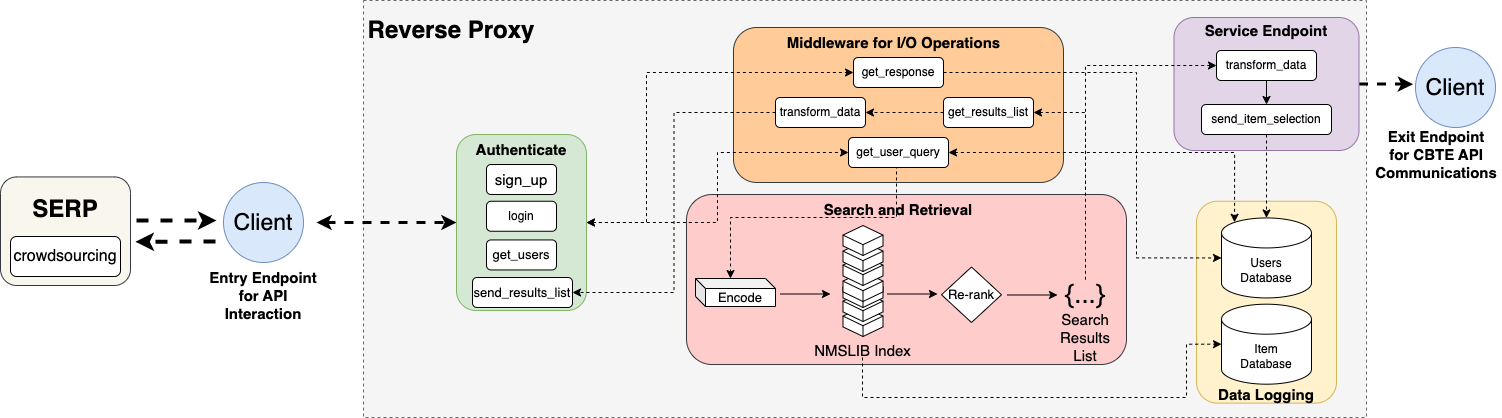

The figure above describes the architecture of the search service. We provide a JSON Web Token (JWT)-based service that authenticates and serves client requests from the search engine results page (SERP).
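As a rough illustration of the token-based authentication mentioned above, the following sketch issues and verifies an HS256-signed JSON Web Token using only the Python standard library. The signing algorithm, the `sub` and `exp` claims, and the function names `issue_token` and `verify_token` are assumptions made for this example; the deployed service may use a different algorithm, claim set, or JWT library.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(data: bytes) -> str:
    """Base64url-encode without padding, as the JWT format requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    """Decode base64url text, restoring the stripped padding first."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(username: str, secret: bytes, ttl_seconds: int = 3600) -> str:
    """Create a signed token carrying the user name and an expiry time."""
    header = {"alg": "HS256", "typ": "JWT"}
    payload = {"sub": username, "exp": int(time.time()) + ttl_seconds}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


def verify_token(token: str, secret: bytes) -> Optional[dict]:
    """Return the payload if the signature matches and the token has not expired."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        expected = hmac.new(
            secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
        ).digest()
        # Constant-time comparison avoids leaking how many bytes matched.
        if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
            return None
        payload = json.loads(_b64url_decode(payload_b64))
    except ValueError:
        return None  # malformed token, bad base64, or invalid JSON
    if not isinstance(payload, dict) or payload.get("exp", 0) < time.time():
        return None
    return payload
```

In a setup like this, the client would send the token with every search request, and the service would call something like `verify_token` before running the query.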

The endpoint first authenticates the user by validating the submitted credentials against the user database. When an authenticated user submits a query, the service encodes it into a dense vector and runs an approximate nearest-neighbor search against a precomputed NMSLIB index. To refine this initial ranking, the tool's core search module re-ranks the top 100 results with a cross-encoder. The service then returns a JSON response containing the resulting test items and their attributes, and the SERP maps this JSON into a format suitable for displaying the results. The client then selects relevant items; in the background, the SERP logs the chosen items and sends this data to the web tool.
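The retrieve-then-re-rank flow described above can be sketched in a few lines. This is a minimal, dependency-free illustration and not the released implementation: a bag-of-words vector stands in for the dense sentence encoder, an exact cosine search stands in for the approximate NMSLIB lookup, and a token-overlap score stands in for the cross-encoder. All function names, the item fields `text` and `skill`, and the example items are hypothetical.

```python
import json
import math
from typing import Callable, Dict, List

Item = Dict[str, str]


def build_vocab(texts: List[str]) -> Dict[str, int]:
    """Assign one vector dimension to every distinct lowercase token."""
    vocab: Dict[str, int] = {}
    for text in texts:
        for token in text.lower().split():
            vocab.setdefault(token, len(vocab))
    return vocab


def encode(text: str, vocab: Dict[str, int]) -> List[float]:
    """Toy stand-in for a sentence encoder: L2-normalized bag-of-words vector."""
    vec = [0.0] * len(vocab)
    for token in text.lower().split():
        if token in vocab:
            vec[vocab[token]] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def nearest(query_vec: List[float], index: Dict[str, List[float]], k: int) -> List[str]:
    """Exact cosine search; stands in for the approximate NMSLIB lookup."""
    scored = sorted(
        ((sum(q * d for q, d in zip(query_vec, vec)), item_id)
         for item_id, vec in index.items()),
        reverse=True,
    )
    return [item_id for _, item_id in scored[:k]]


def token_overlap(query: str, text: str) -> float:
    """Toy stand-in for a cross-encoder: Jaccard overlap of the two token sets."""
    q, t = set(query.lower().split()), set(text.lower().split())
    return len(q & t) / len(q | t) if q | t else 0.0


def search(query: str, items: Dict[str, Item], vocab: Dict[str, int],
           index: Dict[str, List[float]], depth: int = 100,
           scorer: Callable[[str, str], float] = token_overlap) -> str:
    """Retrieve `depth` candidates, re-rank them, and serialize the result as JSON."""
    candidates = nearest(encode(query, vocab), index, depth)
    reranked = sorted(candidates, key=lambda i: scorer(query, items[i]["text"]), reverse=True)
    results = [{"id": i, **items[i]} for i in reranked]
    return json.dumps({"query": query, "results": results}, ensure_ascii=False)


# Hypothetical test items; the real database holds curated exam questions.
items = {
    "q1": {"text": "Was ist eine Python Liste", "skill": "Python"},
    "q2": {"text": "Wie bucht man eine Rechnung", "skill": "Buchhaltung"},
    "q3": {"text": "Python Schleifen", "skill": "Python"},
}
vocab = build_vocab([item["text"] for item in items.values()])
index = {item_id: encode(item["text"], vocab) for item_id, item in items.items()}
print(search("Python Liste", items, vocab, index, depth=2))
```

Keeping the first stage cheap and limiting the expensive pairwise scorer to a fixed candidate depth is the usual motivation for this two-stage design; swapping in a real encoder, ANN index, and cross-encoder would only change `encode`, `nearest`, and `scorer`.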

Search Strategy Selection and Analysis

In this initial phase, no ground-truth data was available, and our priority was to deliver a functional and practical tool for our commercial partners. We therefore implemented eight foundational search and retrieval strategies as initial baselines that define the core of our test item search and retrieval module. This is an early approximation, which we expect to evolve and improve as we gain insights and feedback.

To overcome the cold-start problem, we used a synthetic TREC-style methodology to evaluate and analyze how the proposed strategies performed in combination with 25 popular pre-trained sentence similarity models. We found that a cross-encoder passage re-ranker performed best for our task. For more details about our experimental setup, see the EdTec-QBuilder paper listed under Papers.

Code

To foster research on information retrieval and on assisted and automated exam assembly, we released the EdTec-QBuilder code. The code is available under a Creative Commons Licence.

Dataset

The item database comprises 5,624 test items across 34 high-demand vocational skills in the German job market. We augmented our original item database with ChatGPT 3.5 to increase the robustness and scalability of our experiments and to share a public version of our dataset. Below, under a Creative Commons Licence, you can download the public fold of the item database and the anonymized TREC-style search runs produced by the strategies.
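For readers who want to work with the search runs, the sketch below parses the conventional six-column TREC run layout (`query_id Q0 doc_id rank score run_tag`) into a per-query ranking. That the released files follow exactly this layout is an assumption; the function name `parse_trec_run` and the sample lines are illustrative only.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def parse_trec_run(lines: Iterable[str]) -> Dict[str, List[Tuple[str, float]]]:
    """Group run lines by query and order each query's items by rank."""
    rows = defaultdict(list)
    for line in lines:
        fields = line.split()
        if len(fields) != 6:
            continue  # skip blank or malformed lines
        query_id, _q0, doc_id, rank, score, _run_tag = fields
        rows[query_id].append((int(rank), doc_id, float(score)))
    return {
        query_id: [(doc_id, score) for _rank, doc_id, score in sorted(entries)]
        for query_id, entries in rows.items()
    }


# Made-up sample lines, not taken from the released runs.
sample = [
    "7 Q0 item_12 2 0.81 demo_run",
    "7 Q0 item_03 1 0.93 demo_run",
    "",
    "9 Q0 item_44 1 0.77 demo_run",
]
run = parse_trec_run(sample)
```

With a real file, the same function can be fed an open file handle, since it only iterates over lines.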

Papers

- Palomino, A., Fischer, A., Kuzilek, J., Nitsch, J., Pinkwart, N., & Paaßen, B. (2024, June). EdTec-QBuilder: A Semantic Retrieval Tool for Assembling Vocational Training Exams in German Language. Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 3: System Demonstrations) (pp. 26-35). URL

- Palomino, A., Fischer, D., Buschhüter, R., Roller, J., Pinkwart, N., & Paaßen, B. (2025, June). Mitigating Bias in Item Retrieval for Enhancing Exam Assembly in Vocational Education Services. Proceedings of the 2025 Conference of the North American Chapter of the Association for Computational Linguistics: Industry Track (NAACL). URL

Search & Retrieval Performance Leaderboard

We evaluated our experiments with classic information retrieval metrics. Below, we display the performance leaderboard, sorted by nDCG@100 in descending order. Overall, we tested 176 combinations of search strategy and pre-trained sentence similarity model.

| Strategy | nDCG@100 | MRR@100 | Precision@100 | Recall@100 | F1@100 | MAP@100 |
|---|---|---|---|---|---|---|
| CE-deutsche-telekom_gbert-large-paraphrase-cosine | 0.517 | 0.709 | 0.312 | 0.539 | 0.379 | 0.289 |
| CE-deutsche-telekom_gbert-large-paraphrase-euclidean | 0.516 | 0.713 | 0.313 | 0.536 | 0.378 | 0.288 |
| CE-0_Transformer | 0.494 | 0.703 | 0.274 | 0.498 | 0.34 | 0.276 |
| CE-sentence-transformers_paraphrase-multilingual-mpnet-base-v2 | 0.49 | 0.663 | 0.302 | 0.519 | 0.365 | 0.266 |
| CE-PM-AI_sts_paraphrase_xlm-roberta-base_de-en | 0.477 | 0.723 | 0.277 | 0.473 | 0.335 | 0.258 |
| CE-dell-research-harvard_lt-wikidata-comp-de | 0.476 | 0.691 | 0.267 | 0.483 | 0.331 | 0.26 |
| vertical-deutsche-telekom_gbert-large-paraphrase-euclidean | 0.468 | 0.245 | 0.34 | 0.582 | 0.41 | 0.264 |
| CE-nblokker_debatenet-2-cat | 0.462 | 0.666 | 0.277 | 0.481 | 0.337 | 0.244 |
| CE-aari1995_German_Semantic_STS_V2 | 0.461 | 0.584 | 0.267 | 0.479 | 0.33 | 0.261 |
| vertical-deutsche-telekom_gbert-large-paraphrase-cosine | 0.458 | 0.233 | 0.332 | 0.572 | 0.402 | 0.259 |
| CE-LLukas22_paraphrase-multilingual-mpnet-base-v2-embedding-all | 0.452 | 0.769 | 0.27 | 0.444 | 0.322 | 0.244 |
| vertical-sentence-transformers_paraphrase-multilingual-mpnet-base-v2 | 0.449 | 0.241 | 0.325 | 0.556 | 0.392 | 0.259 |
| CE-sentence-transformers_LaBSE | 0.441 | 0.731 | 0.259 | 0.432 | 0.31 | 0.235 |
| LM+ANN-deutsche-telekom_gbert-large-paraphrase-cosine | 0.437 | 0.202 | 0.312 | 0.539 | 0.379 | 0.251 |
| vertical-intfloat_multilingual-e5-base | 0.437 | 0.253 | 0.302 | 0.536 | 0.37 | 0.239 |
| vertical-efederici_e5-base-multilingual-4096 | 0.437 | 0.253 | 0.302 | 0.536 | 0.37 | 0.238 |
| CE-setu4993_LaBSE | 0.434 | 0.684 | 0.253 | 0.438 | 0.308 | 0.232 |
| LM+ANN-deutsche-telekom_gbert-large-paraphrase-euclidean | 0.434 | 0.209 | 0.313 | 0.536 | 0.378 | 0.245 |
| vertical-nblokker_debatenet-2-cat | 0.433 | 0.242 | 0.311 | 0.54 | 0.378 | 0.244 |
| vertical-aari1995_German_Semantic_STS_V2 | 0.433 | 0.23 | 0.309 | 0.537 | 0.376 | 0.249 |
| vertical-0_Transformer | 0.432 | 0.243 | 0.307 | 0.543 | 0.376 | 0.232 |
| vertical-dell-research-harvard_lt-wikidata-comp-de | 0.425 | 0.235 | 0.301 | 0.539 | 0.371 | 0.224 |
| LambdaMart-deutsche-telekom_gbert-large-paraphrase-euclidean | 0.425 | 0.328 | 0.313 | 0.536 | 0.378 | 0.176 |
| vertical-LLukas22_paraphrase-multilingual-mpnet-base-v2-embedding-all | 0.424 | 0.259 | 0.312 | 0.517 | 0.373 | 0.236 |
| vertical-PM-AI_sts_paraphrase_xlm-roberta-base_de-en | 0.424 | 0.253 | 0.309 | 0.528 | 0.373 | 0.226 |
| LM+ANN-sentence-transformers_paraphrase-multilingual-mpnet-base-v2 | 0.424 | 0.242 | 0.302 | 0.519 | 0.365 | 0.242 |
| CE-PM-AI_bi-encoder_msmarco_bert-base_german | 0.418 | 0.728 | 0.229 | 0.389 | 0.276 | 0.229 |
| CE-ef-zulla_e5-multi-sml-torch | 0.415 | 0.635 | 0.257 | 0.44 | 0.309 | 0.201 |
| LambdaMart-deutsche-telekom_gbert-large-paraphrase-cosine | 0.415 | 0.279 | 0.312 | 0.539 | 0.379 | 0.169 |
| CE-efederici_e5-base-multilingual-4096 | 0.411 | 0.591 | 0.248 | 0.436 | 0.302 | 0.193 |
| vertical-ef-zulla_e5-multi-sml-torch | 0.41 | 0.236 | 0.293 | 0.5 | 0.353 | 0.233 |
| CE-intfloat_multilingual-e5-base | 0.41 | 0.58 | 0.247 | 0.433 | 0.301 | 0.194 |
| vertical-sentence-transformers_LaBSE | 0.405 | 0.17 | 0.299 | 0.508 | 0.36 | 0.216 |
| LambdaMart-sentence-transformers_paraphrase-multilingual-mpnet-base-v2 | 0.401 | 0.28 | 0.302 | 0.519 | 0.365 | 0.161 |
| vertical-setu4993_LaBSE | 0.398 | 0.229 | 0.295 | 0.501 | 0.356 | 0.201 |
| LambdaMart-0_Transformer | 0.398 | 0.396 | 0.274 | 0.498 | 0.34 | 0.159 |
| MILP-deutsche-telekom_gbert-large-paraphrase-euclidean | 0.397 | 0.267 | 0.313 | 0.536 | 0.378 | 0.154 |
| LM+ANN-0_Transformer | 0.396 | 0.169 | 0.274 | 0.498 | 0.34 | 0.213 |
| CE-symanto_sn-xlm-roberta-base-snli-mnli-anli-xnli | 0.394 | 0.617 | 0.228 | 0.383 | 0.273 | 0.195 |
| vertical-sentence-transformers_distiluse-base-multilingual-cased-v1 | 0.391 | 0.228 | 0.284 | 0.491 | 0.344 | 0.2 |
| LambdaMart-dell-research-harvard_lt-wikidata-comp-de | 0.388 | 0.457 | 0.267 | 0.483 | 0.331 | 0.15 |
| CE-and-effect_musterdatenkatalog_clf | 0.388 | 0.673 | 0.225 | 0.378 | 0.27 | 0.196 |
| MILP-deutsche-telekom_gbert-large-paraphrase-cosine | 0.386 | 0.189 | 0.312 | 0.539 | 0.379 | 0.144 |
| LM+ANN-nblokker_debatenet-2-cat | 0.386 | 0.172 | 0.277 | 0.481 | 0.337 | 0.206 |
| LambdaMart-PM-AI_sts_paraphrase_xlm-roberta-base_de-en | 0.385 | 0.395 | 0.277 | 0.473 | 0.335 | 0.145 |
| LM+ANN-PM-AI_sts_paraphrase_xlm-roberta-base_de-en | 0.385 | 0.199 | 0.277 | 0.473 | 0.335 | 0.201 |
| vertical-sentence-transformers_distiluse-base-multilingual-cased-v2 | 0.382 | 0.228 | 0.276 | 0.49 | 0.339 | 0.184 |
| LM+ANN-aari1995_German_Semantic_STS_V2 | 0.382 | 0.155 | 0.267 | 0.479 | 0.33 | 0.208 |
| MILP-sentence-transformers_paraphrase-multilingual-mpnet-base-v2 | 0.382 | 0.189 | 0.302 | 0.519 | 0.365 | 0.147 |
| LM+ANN-dell-research-harvard_lt-wikidata-comp-de | 0.381 | 0.159 | 0.267 | 0.483 | 0.331 | 0.196 |
| LambdaMart-aari1995_German_Semantic_STS_V2 | 0.381 | 0.386 | 0.267 | 0.479 | 0.33 | 0.153 |
| LambdaMart-nblokker_debatenet-2-cat | 0.374 | 0.297 | 0.277 | 0.481 | 0.337 | 0.139 |
| LM+ANN-efederici_e5-base-multilingual-4096 | 0.372 | 0.22 | 0.248 | 0.436 | 0.302 | 0.198 |
| vertical-and-effect_musterdatenkatalog_clf | 0.372 | 0.253 | 0.259 | 0.441 | 0.313 | 0.204 |
| LM+ANN-LLukas22_paraphrase-multilingual-mpnet-base-v2-embedding-all | 0.371 | 0.236 | 0.27 | 0.444 | 0.322 | 0.202 |
| LM+ANN-ef-zulla_e5-multi-sml-torch | 0.37 | 0.241 | 0.257 | 0.44 | 0.309 | 0.198 |
| LM+ANN-intfloat_multilingual-e5-base | 0.369 | 0.217 | 0.247 | 0.433 | 0.301 | 0.195 |
| LM+ANN-sentence-transformers_LaBSE | 0.362 | 0.179 | 0.259 | 0.432 | 0.31 | 0.197 |
| CE-barisaydin_text2vec-base-multilingual | 0.362 | 0.659 | 0.205 | 0.357 | 0.25 | 0.175 |
| vertical-PM-AI_bi-encoder_msmarco_bert-base_german | 0.361 | 0.221 | 0.263 | 0.45 | 0.318 | 0.182 |
| CE-shibing624_text2vec-base-multilingual | 0.361 | 0.647 | 0.205 | 0.357 | 0.249 | 0.174 |
| MILP-nblokker_debatenet-2-cat | 0.351 | 0.202 | 0.277 | 0.481 | 0.337 | 0.122 |
| MILP-PM-AI_sts_paraphrase_xlm-roberta-base_de-en | 0.351 | 0.274 | 0.277 | 0.473 | 0.335 | 0.122 |
| LM+ANN-setu4993_LaBSE | 0.35 | 0.132 | 0.253 | 0.438 | 0.308 | 0.175 |
| Q-Term-Exp-deutsche-telekom_gbert-large-paraphrase-cosine | 0.35 | 0.192 | 0.253 | 0.427 | 0.304 | 0.182 |
| CE-sentence-transformers_distiluse-base-multilingual-cased-v2 | 0.35 | 0.485 | 0.199 | 0.38 | 0.252 | 0.168 |
| vertical-shibing624_text2vec-base-multilingual | 0.347 | 0.196 | 0.238 | 0.408 | 0.288 | 0.188 |
| MILP-0_Transformer | 0.347 | 0.105 | 0.274 | 0.498 | 0.34 | 0.12 |
| vertical-symanto_sn-xlm-roberta-base-snli-mnli-anli-xnli | 0.347 | 0.225 | 0.251 | 0.418 | 0.299 | 0.183 |
| vertical-barisaydin_text2vec-base-multilingual | 0.346 | 0.196 | 0.237 | 0.406 | 0.287 | 0.187 |
| LambdaMart-sentence-transformers_LaBSE | 0.345 | 0.286 | 0.259 | 0.432 | 0.31 | 0.127 |
| CE-sentence-transformers_distiluse-base-multilingual-cased-v1 | 0.345 | 0.594 | 0.199 | 0.355 | 0.244 | 0.167 |
| LambdaMart-ef-zulla_e5-multi-sml-torch | 0.342 | 0.241 | 0.257 | 0.44 | 0.309 | 0.126 |
| LambdaMart-efederici_e5-base-multilingual-4096 | 0.341 | 0.324 | 0.248 | 0.436 | 0.302 | 0.113 |
| MILP-dell-research-harvard_lt-wikidata-comp-de | 0.341 | 0.137 | 0.267 | 0.483 | 0.331 | 0.115 |
| Q-Term-Exp-deutsche-telekom_gbert-large-paraphrase-euclidean | 0.34 | 0.194 | 0.247 | 0.413 | 0.296 | 0.17 |
| LambdaMart-intfloat_multilingual-e5-base | 0.339 | 0.324 | 0.247 | 0.433 | 0.301 | 0.111 |
| Q-Term-Exp-sentence-transformers_paraphrase-multilingual-mpnet-base-v2 | 0.339 | 0.199 | 0.24 | 0.405 | 0.289 | 0.183 |
| MILP-aari1995_German_Semantic_STS_V2 | 0.338 | 0.134 | 0.266 | 0.478 | 0.329 | 0.118 |
| MILP-LLukas22_paraphrase-multilingual-mpnet-base-v2-embedding-all | 0.337 | 0.251 | 0.27 | 0.444 | 0.322 | 0.122 |
| LambdaMart-LLukas22_paraphrase-multilingual-mpnet-base-v2-embedding-all | 0.335 | 0.226 | 0.27 | 0.444 | 0.322 | 0.123 |
| LambdaMart-setu4993_LaBSE | 0.332 | 0.227 | 0.253 | 0.438 | 0.308 | 0.115 |
| TFIDF-W-AVG-deutsche-telekom_gbert-large-paraphrase-cosine | 0.329 | 0.147 | 0.244 | 0.406 | 0.292 | 0.153 |
| CE-setu4993_LEALLA-large | 0.324 | 0.612 | 0.177 | 0.32 | 0.219 | 0.163 |
| LM+ANN-and-effect_musterdatenkatalog_clf | 0.319 | 0.197 | 0.225 | 0.378 | 0.27 | 0.166 |
| TFIDF-W-AVG-PM-AI_sts_paraphrase_xlm-roberta-base_de-en | 0.319 | 0.189 | 0.234 | 0.392 | 0.281 | 0.15 |
| MILP-ef-zulla_e5-multi-sml-torch | 0.319 | 0.231 | 0.257 | 0.44 | 0.309 | 0.106 |
| LM+ANN-symanto_sn-xlm-roberta-base-snli-mnli-anli-xnli | 0.318 | 0.162 | 0.228 | 0.383 | 0.273 | 0.159 |
| LM+ANN-PM-AI_bi-encoder_msmarco_bert-base_german | 0.316 | 0.156 | 0.229 | 0.389 | 0.276 | 0.149 |
| MILP-efederici_e5-base-multilingual-4096 | 0.315 | 0.247 | 0.248 | 0.436 | 0.302 | 0.094 |
| MILP-intfloat_multilingual-e5-base | 0.314 | 0.249 | 0.247 | 0.433 | 0.301 | 0.094 |
| MILP-sentence-transformers_LaBSE | 0.312 | 0.172 | 0.259 | 0.432 | 0.31 | 0.105 |
| vertical-setu4993_LEALLA-large | 0.312 | 0.167 | 0.243 | 0.402 | 0.29 | 0.146 |
| MILP-setu4993_LaBSE | 0.31 | 0.149 | 0.253 | 0.438 | 0.308 | 0.101 |
| vertical-clips_mfaq | 0.309 | 0.182 | 0.212 | 0.379 | 0.26 | 0.133 |
| LM+ANN-barisaydin_text2vec-base-multilingual | 0.306 | 0.204 | 0.205 | 0.357 | 0.25 | 0.155 |
| LM+ANN-shibing624_text2vec-base-multilingual | 0.306 | 0.204 | 0.205 | 0.357 | 0.249 | 0.156 |
| Q-Term-Exp-aari1995_German_Semantic_STS_V2 | 0.305 | 0.126 | 0.217 | 0.382 | 0.266 | 0.145 |
| Q-Term-Exp-PM-AI_sts_paraphrase_xlm-roberta-base_de-en | 0.302 | 0.144 | 0.217 | 0.377 | 0.264 | 0.144 |
| TFIDF-W-AVG-nblokker_debatenet-2-cat | 0.3 | 0.148 | 0.221 | 0.367 | 0.265 | 0.139 |
| LambdaMart-PM-AI_bi-encoder_msmarco_bert-base_german | 0.299 | 0.214 | 0.229 | 0.389 | 0.276 | 0.097 |
| LambdaMart-symanto_sn-xlm-roberta-base-snli-mnli-anli-xnli | 0.292 | 0.186 | 0.228 | 0.383 | 0.273 | 0.092 |
| LM+ANN-sentence-transformers_distiluse-base-multilingual-cased-v2 | 0.292 | 0.135 | 0.199 | 0.38 | 0.252 | 0.139 |
| LambdaMart-and-effect_musterdatenkatalog_clf | 0.291 | 0.274 | 0.225 | 0.378 | 0.27 | 0.094 |
| Q-Term-Exp-symanto_sn-xlm-roberta-base-snli-mnli-anli-xnli | 0.29 | 0.146 | 0.209 | 0.348 | 0.25 | 0.137 |
| TFIDF-W-AVG-sentence-transformers_paraphrase-multilingual-mpnet-base-v2 | 0.288 | 0.253 | 0.193 | 0.32 | 0.231 | 0.149 |
| Q-Term-Exp-sentence-transformers_distiluse-base-multilingual-cased-v1 | 0.287 | 0.11 | 0.212 | 0.384 | 0.262 | 0.115 |
| TFIDF-W-AVG-dell-research-harvard_lt-wikidata-comp-de | 0.286 | 0.159 | 0.211 | 0.352 | 0.253 | 0.122 |
| LM+ANN-sentence-transformers_distiluse-base-multilingual-cased-v1 | 0.286 | 0.165 | 0.199 | 0.355 | 0.244 | 0.131 |
| TFIDF-W-AVG-deutsche-telekom_gbert-large-paraphrase-euclidean | 0.284 | 0.174 | 0.207 | 0.35 | 0.25 | 0.12 |
| Q-Term-Exp-0_Transformer | 0.281 | 0.133 | 0.201 | 0.353 | 0.245 | 0.118 |
| TFIDF-W-AVG-setu4993_LaBSE | 0.281 | 0.149 | 0.198 | 0.333 | 0.238 | 0.134 |
| MILP-symanto_sn-xlm-roberta-base-snli-mnli-anli-xnli | 0.281 | 0.198 | 0.228 | 0.383 | 0.273 | 0.082 |
| CE-clips_mfaq | 0.28 | 0.543 | 0.151 | 0.274 | 0.187 | 0.118 |
| Q-Term-Exp-and-effect_musterdatenkatalog_clf | 0.279 | 0.172 | 0.195 | 0.337 | 0.237 | 0.127 |
| Q-Term-Exp-LLukas22_paraphrase-multilingual-mpnet-base-v2-embedding-all | 0.275 | 0.144 | 0.195 | 0.336 | 0.237 | 0.129 |
| TFIDF-W-AVG-0_Transformer | 0.274 | 0.182 | 0.199 | 0.331 | 0.239 | 0.114 |
| MILP-PM-AI_bi-encoder_msmarco_bert-base_german | 0.274 | 0.11 | 0.229 | 0.389 | 0.276 | 0.08 |
| TFIDF-W-AVG-aari1995_German_Semantic_STS_V2 | 0.273 | 0.139 | 0.193 | 0.337 | 0.236 | 0.115 |
| LambdaMart-sentence-transformers_distiluse-base-multilingual-cased-v2 | 0.273 | 0.21 | 0.199 | 0.38 | 0.252 | 0.086 |
| Q-Term-Exp-shibing624_text2vec-base-multilingual | 0.27 | 0.132 | 0.189 | 0.337 | 0.233 | 0.12 |
| Q-Term-Exp-barisaydin_text2vec-base-multilingual | 0.269 | 0.132 | 0.188 | 0.334 | 0.231 | 0.121 |
| LambdaMart-barisaydin_text2vec-base-multilingual | 0.269 | 0.271 | 0.205 | 0.357 | 0.25 | 0.079 |
| MILP-and-effect_musterdatenkatalog_clf | 0.267 | 0.16 | 0.225 | 0.378 | 0.27 | 0.077 |
| TFIDF-W-AVG-symanto_sn-xlm-roberta-base-snli-mnli-anli-xnli | 0.266 | 0.143 | 0.195 | 0.322 | 0.233 | 0.116 |
| LambdaMart-shibing624_text2vec-base-multilingual | 0.264 | 0.21 | 0.205 | 0.357 | 0.249 | 0.077 |
| LambdaMart-sentence-transformers_distiluse-base-multilingual-cased-v1 | 0.262 | 0.211 | 0.199 | 0.355 | 0.244 | 0.078 |
| Q-Term-Exp-intfloat_multilingual-e5-base | 0.261 | 0.149 | 0.179 | 0.306 | 0.216 | 0.127 |
| Q-Term-Exp-nblokker_debatenet-2-cat | 0.261 | 0.14 | 0.18 | 0.327 | 0.224 | 0.115 |
| TFIDF-W-AVG-sentence-transformers_LaBSE | 0.259 | 0.119 | 0.188 | 0.324 | 0.229 | 0.108 |
| MILP-sentence-transformers_distiluse-base-multilingual-cased-v2 | 0.259 | 0.187 | 0.199 | 0.38 | 0.252 | 0.077 |
| Q-Term-Exp-sentence-transformers_LaBSE | 0.259 | 0.08 | 0.193 | 0.324 | 0.232 | 0.116 |
| Q-Term-Exp-dell-research-harvard_lt-wikidata-comp-de | 0.259 | 0.123 | 0.186 | 0.319 | 0.225 | 0.109 |
| TFIDF-W-AVG-sentence-transformers_distiluse-base-multilingual-cased-v1 | 0.257 | 0.122 | 0.179 | 0.303 | 0.216 | 0.128 |
| Q-Term-Exp-efederici_e5-base-multilingual-4096 | 0.257 | 0.15 | 0.177 | 0.301 | 0.213 | 0.124 |
| Q-Term-Exp-sentence-transformers_distiluse-base-multilingual-cased-v2 | 0.255 | 0.106 | 0.184 | 0.36 | 0.236 | 0.098 |
| Q-Term-Exp-ef-zulla_e5-multi-sml-torch | 0.254 | 0.135 | 0.177 | 0.306 | 0.214 | 0.12 |
| MILP-shibing624_text2vec-base-multilingual | 0.25 | 0.112 | 0.205 | 0.357 | 0.249 | 0.07 |
| MILP-barisaydin_text2vec-base-multilingual | 0.249 | 0.101 | 0.205 | 0.357 | 0.25 | 0.07 |
| MILP-sentence-transformers_distiluse-base-multilingual-cased-v1 | 0.249 | 0.215 | 0.199 | 0.355 | 0.244 | 0.069 |
| CE-LLukas22_all-MiniLM-L12-v2-embedding-all | 0.246 | 0.574 | 0.137 | 0.221 | 0.162 | 0.1 |
| Q-Term-Exp-PM-AI_bi-encoder_msmarco_bert-base_german | 0.244 | 0.113 | 0.175 | 0.303 | 0.213 | 0.105 |
| TFIDF-W-AVG-sentence-transformers_distiluse-base-multilingual-cased-v2 | 0.244 | 0.117 | 0.175 | 0.303 | 0.213 | 0.108 |
| TFIDF-W-AVG-shibing624_text2vec-base-multilingual | 0.243 | 0.16 | 0.167 | 0.289 | 0.203 | 0.105 |
| LM+ANN-setu4993_LEALLA-large | 0.239 | 0.099 | 0.177 | 0.32 | 0.219 | 0.101 |
| TFIDF-W-AVG-barisaydin_text2vec-base-multilingual | 0.237 | 0.161 | 0.163 | 0.28 | 0.198 | 0.101 |
| TFIDF-W-AVG-LLukas22_paraphrase-multilingual-mpnet-base-v2-embedding-all | 0.233 | 0.224 | 0.167 | 0.273 | 0.199 | 0.101 |
| LM+ANN-clips_mfaq | 0.23 | 0.168 | 0.151 | 0.274 | 0.187 | 0.088 |
| LambdaMart-setu4993_LEALLA-large | 0.228 | 0.182 | 0.177 | 0.32 | 0.219 | 0.066 |
| TFIDF-W-AVG-PM-AI_bi-encoder_msmarco_bert-base_german | 0.227 | 0.081 | 0.165 | 0.282 | 0.2 | 0.095 |
| MILP-setu4993_LEALLA-large | 0.225 | 0.234 | 0.177 | 0.32 | 0.219 | 0.065 |
| TFIDF-W-AVG-intfloat_multilingual-e5-base | 0.216 | 0.109 | 0.159 | 0.261 | 0.189 | 0.086 |
| TFIDF-W-AVG-efederici_e5-base-multilingual-4096 | 0.21 | 0.117 | 0.157 | 0.262 | 0.189 | 0.078 |
| LambdaMart-clips_mfaq | 0.207 | 0.14 | 0.151 | 0.274 | 0.187 | 0.054 |
| TFIDF-W-AVG-ef-zulla_e5-multi-sml-torch | 0.205 | 0.109 | 0.147 | 0.243 | 0.176 | 0.09 |
| CE-meta-llama_Llama-2-7b-chat-hf | 0.205 | 0.55 | 0.108 | 0.186 | 0.131 | 0.088 |
| vertical-LLukas22_all-MiniLM-L12-v2-embedding-all | 0.203 | 0.174 | 0.144 | 0.246 | 0.173 | 0.067 |
| TFIDF-W-AVG-clips_mfaq | 0.2 | 0.173 | 0.135 | 0.241 | 0.167 | 0.079 |
| Q-Term-Exp-setu4993_LEALLA-large | 0.196 | 0.073 | 0.143 | 0.247 | 0.174 | 0.072 |
| Q-Term-Exp-meta-llama_Llama-2-7b-chat-hf | 0.191 | 0.083 | 0.127 | 0.238 | 0.16 | 0.077 |
| TFIDF-W-AVG-and-effect_musterdatenkatalog_clf | 0.186 | 0.094 | 0.137 | 0.22 | 0.162 | 0.07 |
| MILP-clips_mfaq | 0.186 | 0.125 | 0.151 | 0.274 | 0.187 | 0.039 |
| LM+ANN-LLukas22_all-MiniLM-L12-v2-embedding-all | 0.177 | 0.091 | 0.137 | 0.221 | 0.162 | 0.057 |
| Q-Term-Exp-LLukas22_all-MiniLM-L12-v2-embedding-all | 0.177 | 0.131 | 0.13 | 0.214 | 0.156 | 0.073 |
| vertical-meta-llama_Llama-2-7b-chat-hf | 0.175 | 0.176 | 0.13 | 0.228 | 0.159 | 0.064 |
| Q-Term-Exp-setu4993_LaBSE | 0.168 | 0.145 | 0.111 | 0.202 | 0.138 | 0.059 |
| LambdaMart-LLukas22_all-MiniLM-L12-v2-embedding-all | 0.165 | 0.1 | 0.137 | 0.221 | 0.162 | 0.04 |
| MILP-LLukas22_all-MiniLM-L12-v2-embedding-all | 0.157 | 0.105 | 0.137 | 0.221 | 0.162 | 0.035 |
| TFIDF-W-AVG-LLukas22_all-MiniLM-L12-v2-embedding-all | 0.15 | 0.12 | 0.109 | 0.175 | 0.128 | 0.046 |
| LM+ANN-meta-llama_Llama-2-7b-chat-hf | 0.149 | 0.16 | 0.108 | 0.186 | 0.131 | 0.053 |
| LambdaMart-meta-llama_Llama-2-7b-chat-hf | 0.14 | 0.123 | 0.108 | 0.186 | 0.131 | 0.034 |
| Q-Term-Exp-clips_mfaq | 0.136 | 0.089 | 0.093 | 0.175 | 0.117 | 0.04 |
| MILP-meta-llama_Llama-2-7b-chat-hf | 0.126 | 0.04 | 0.108 | 0.186 | 0.131 | 0.028 |
| TFIDF-W-AVG-meta-llama_Llama-2-7b-chat-hf | 0.105 | 0.068 | 0.076 | 0.137 | 0.094 | 0.032 |
| TFIDF-W-AVG-setu4993_LEALLA-large | 0.097 | 0.044 | 0.079 | 0.131 | 0.094 | 0.016 |
| bm25 | 0.075 | 0.124 | 0.053 | 0.09 | 0.063 | 0.012 |
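
To make the leaderboard columns concrete, the sketch below computes the six metrics for a single query under binary relevance. It follows common textbook formulations (precision divided by the cutoff k, average precision divided by the number of relevant items, log2 discounting for nDCG); the exact variants and tooling used for the leaderboard are not stated here, so treat the function `evaluate_query` and these choices as assumptions. Leaderboard-style scores would then be averages of these per-query values over all queries.

```python
import math
from typing import Dict, Sequence, Set


def evaluate_query(ranking: Sequence[str], relevant: Set[str], k: int = 100) -> Dict[str, float]:
    """Compute binary-relevance retrieval metrics for one query at cutoff k."""
    # 1 where the ranked item is relevant, 0 otherwise, for the top-k positions.
    hits = [1 if doc_id in relevant else 0 for doc_id in ranking[:k]]
    n_hits = sum(hits)

    precision = n_hits / k
    recall = n_hits / len(relevant) if relevant else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0

    # Reciprocal rank of the first relevant item (0 if none was retrieved).
    rr = next((1.0 / rank for rank, hit in enumerate(hits, start=1) if hit), 0.0)

    # DCG with log2 discount, normalized by the DCG of an ideal ranking.
    dcg = sum(hit / math.log2(rank + 1) for rank, hit in enumerate(hits, start=1))
    ideal = sum(1.0 / math.log2(rank + 1) for rank in range(1, min(len(relevant), k) + 1))
    ndcg = dcg / ideal if ideal else 0.0

    # Average precision: mean of precision values at each relevant hit.
    found, ap_sum = 0, 0.0
    for rank, hit in enumerate(hits, start=1):
        if hit:
            found += 1
            ap_sum += found / rank
    ap = ap_sum / len(relevant) if relevant else 0.0

    return {"ndcg": ndcg, "rr": rr, "precision": precision,
            "recall": recall, "f1": f1, "ap": ap}


# Toy example: two of three relevant items are retrieved in the top 4.
metrics = evaluate_query(["a", "b", "c", "d"], {"a", "c", "x"}, k=4)
```

Note that precision, recall, and F1 depend only on which items appear in the top k, while nDCG, MRR, and MAP also depend on their order, which is why re-ranking a fixed candidate set can change the latter three without changing the former.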